|

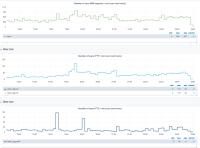

Adding the last rows from storm-backend-metrics.log:

23:25:55.133 - synch.ls [(count=71952082, m1_rate=78.74230957953807, m5_rate=203.3783220703492, m15_rate=284.32058517739483) (max=18110.076702, min=9.054869, mean=1398.911431029295, p95=6628.170163, p99=18110.076702)] duration_units=milliseconds, rate_units=events/minute

23:25:55.133 - synch.mkdir [(count=651915, m1_rate=0.4489344991993962, m5_rate=0.9996813382802947, m15_rate=2.938306089529111) (max=2262.6094129999997, min=8.964582, mean=926.3620534192959, p95=1142.15647, p99=1142.15647)] duration_units=milliseconds, rate_units=events/minute

23:25:55.133 - synch.mv [(count=0, m1_rate=0.0, m5_rate=0.0, m15_rate=0.0) (max=0.0, min=0.0, mean=0.0, p95=0.0, p99=0.0)] duration_units=milliseconds, rate_units=events/minute

23:25:55.134 - synch.pd [(count=8128479, m1_rate=1.0014775881691327, m5_rate=17.513433207045587, m15_rate=32.15343161896282) (max=2382.4285, min=11.087868, mean=86.09382842079452, p95=704.231577, p99=704.231577)] duration_units=milliseconds, rate_units=events/minute

23:25:57.574 - synch.ping [(count=11263507, m1_rate=14.672591592351536, m5_rate=29.61748000620723, m15_rate=45.35940069146042) (max=727.672752, min=0.037839, mean=30.988702910969955, p95=0.38009899999999996, p99=727.672752)] duration_units=milliseconds, rate_units=events/minute

23:25:57.575 - synch.rf [(count=3108144, m1_rate=2.275478611197182, m5_rate=6.041267684828509, m15_rate=11.073244392699149) (max=13846.969083999998, min=6.743231, mean=5238.839677417935, p95=13846.969083999998, p99=13846.969083999998)] duration_units=milliseconds, rate_units=events/minute

23:25:57.575 - synch.rm [(count=7264060, m1_rate=2.428741662549194, m5_rate=21.789814532777854, m15_rate=17.79838731835939) (max=2384.177084, min=12.280045999999999, mean=442.59246254776775, p95=2175.954425, p99=2175.954425)] duration_units=milliseconds, rate_units=events/minute

23:25:57.575 - synch.rmDir [(count=3660, m1_rate=1.77863632503E-312, m5_rate=8.89318162514E-312, m15_rate=2.6679544875427E-311) (max=24.527711999999998, min=8.412077, mean=8.738221413680268, p95=8.738203, p99=8.738203)] duration_units=milliseconds, rate_units=events/minute

23:25:57.576 - fs.aclOp [(count=347290508, m1_rate=61.53443396193107, m5_rate=712.8503403158022, m15_rate=1340.8594276225072) (max=2140.193092, min=0.034564, mean=14.023937659411207, p95=1.769826, p99=831.6180529999999)] duration_units=milliseconds, rate_units=events/minute

23:26:02.005 - fs.fileAttributeOp [(count=176295161, m1_rate=74.14077847272269, m5_rate=514.7531431957868, m15_rate=816.7100446664926) (max=2.4630829999999997, min=0.014825999999999999, mean=0.057977962956402264, p95=0.166902, p99=0.237734)] duration_units=milliseconds, rate_units=events/minute

23:26:02.680 - ea [(count=458897915, m1_rate=581.2318458871677, m5_rate=2025.9262383826863, m15_rate=2524.3492604568687) (max=2197.537074, min=0.022029999999999998, mean=18.1119945157166, p95=1.3073629999999998, p99=714.6460069999999)] duration_units=milliseconds, rate_units=events/minute
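To compare these rows without reading them by eye, the lines can be parsed into records. The following is a minimal sketch, not part of StoRM itself: the regular expression is inferred only from the rows pasted above, and the names `LINE_RE`, `parse_metric_line` and `slowest_by_mean` are hypothetical helpers introduced for illustration.

```python
import re

# Layout assumed from the pasted rows only:
# HH:MM:SS.mmm - <op> [(<rate fields>) (<duration fields>)] duration_units=..., rate_units=...
LINE_RE = re.compile(
    r"^(?P<time>\d{2}:\d{2}:\d{2}\.\d{3}) - (?P<op>\S+) "
    r"\[\((?P<rates>[^)]*)\) \((?P<durations>[^)]*)\)\]"
)


def _pairs(text):
    """Turn 'a=1, b=2.5' into {'a': 1.0, 'b': 2.5}."""
    out = {}
    for item in text.split(","):
        key, _, value = item.strip().partition("=")
        out[key] = float(value)
    return out


def parse_metric_line(line):
    """Return a dict for one metrics row, or None if the line does not match."""
    match = LINE_RE.match(line.strip())
    if match is None:
        return None
    record = {"time": match.group("time"), "op": match.group("op")}
    record.update(_pairs(match.group("rates")))
    record.update(_pairs(match.group("durations")))
    record["count"] = int(record["count"])  # count is an event total, not a rate
    return record


def slowest_by_mean(lines, top=3):
    """Return the `top` parsed records with the highest mean duration."""
    records = [r for r in map(parse_metric_line, lines) if r is not None]
    return sorted(records, key=lambda r: r["mean"], reverse=True)[:top]
```

Passing the lines of the log file to `slowest_by_mean` (for example via `open(path)`) returns the operations with the largest mean duration first; blank or non-matching lines are skipped.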

|